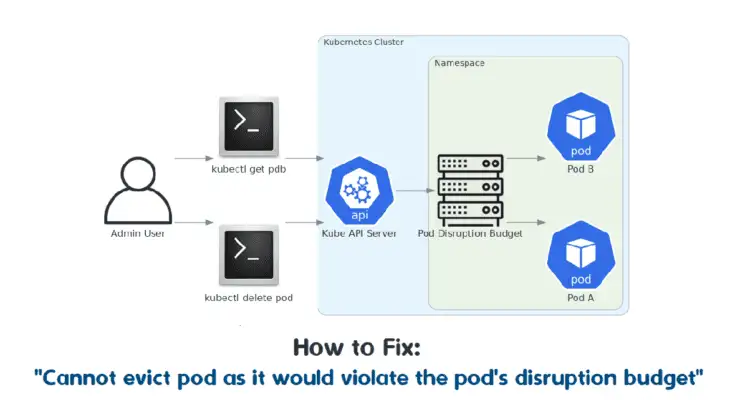

When managing Kubernetes clusters, you might encounter an error stating, “Cannot evict pod as it would violate the pod’s disruption budget.” This error occurs when a Pod Disruption Budget (PDB) blocks a pod’s eviction to protect your application’s availability during voluntary disruptions such as node drains or cluster upgrades.

In this guide, we will explain what a PDB is, why this error occurs, and how to resolve it with examples.


Understanding Pod Disruption Budget (PDB)

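A Pod Disruption Budget (PDB) is a Kubernetes resource that limits how many pods of a replicated application can be down at the same time because of voluntary disruptions, such as node drains. It specifies either the minimum number of matching pods that must remain available (minAvailable) or the maximum number that may be unavailable (maxUnavailable). Either value can be an absolute number or a percentage. PDBs help keep your application available by preventing too many of its pods from being disrupted simultaneously. They cannot prevent involuntary disruptions such as hardware failures.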

Example PDB Configuration

```yaml
apiVersion: policy/v1
kind: PodDisruptionBudget
metadata:
  name: my-app-pdb
spec:
  minAvailable: 2
  selector:
    matchLabels:
      app: my-app
```

In this example, the PDB tells the Eviction API to refuse any voluntary eviction that would leave fewer than two healthy pods with the label app: my-app.
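The Eviction API checks a value called status.disruptionsAllowed. The disruption controller computes that value from the PDB spec. The Python sketch below is a simplified model of that calculation, not the Kubernetes source. The function names are hypothetical. The sketch assumes all matching pods belong to one controller and ignores details such as unmanaged pods. It rounds percentage values up, as Kubernetes does for PDBs.

```python
from typing import Optional, Union

IntOrPercent = Union[int, str]  # e.g. 2 or "50%", like the PDB fields


def scaled_value(value: IntOrPercent, total: int) -> int:
    """Resolve an int-or-percent value against total, rounding percentages up."""
    if isinstance(value, int):
        return value
    if not value.endswith("%"):
        raise ValueError(f"invalid int-or-percent value: {value!r}")
    percent = int(value[:-1])
    return (percent * total + 99) // 100  # ceiling of percent * total / 100


def disruptions_allowed(current_healthy: int, expected_pods: int,
                        min_available: Optional[IntOrPercent] = None,
                        max_unavailable: Optional[IntOrPercent] = None) -> int:
    """Simplified model of status.disruptionsAllowed for a single PDB."""
    if (min_available is None) == (max_unavailable is None):
        raise ValueError("set exactly one of min_available or max_unavailable")
    if expected_pods <= 0:
        return 0
    if max_unavailable is not None:
        unavailable = scaled_value(max_unavailable, expected_pods)
        desired_healthy = max(0, expected_pods - unavailable)
    else:
        desired_healthy = scaled_value(min_available, expected_pods)
    # Never negative: a budget that is already violated allows no evictions.
    return max(0, current_healthy - desired_healthy)


# The example PDB (minAvailable: 2) with three healthy replicas.
print(disruptions_allowed(current_healthy=3, expected_pods=3, min_available=2))  # prints 1
```

With the example PDB and three healthy replicas, one pod can be evicted. Once a replica is down, the allowance drops to zero and further evictions are refused.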

Why Does the Error Occur?

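The error “Cannot evict pod as it would violate the pod’s disruption budget” occurs when evicting a pod would reduce the number of healthy pods below the threshold set in the PDB. In other words, the PDB currently allows 0 disruptions. In that case the Eviction API rejects the request with HTTP status 429 (Too Many Requests) instead of removing the pod. This is a protective measure to prevent significant disruption to your application.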
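The following hypothetical Python sketch makes the failure mode concrete. It models the budget check the Eviction API performs for a pod covered by a single PDB. If status.disruptionsAllowed is zero, the request is rejected. Otherwise the pod is evicted and the allowance is decremented until the disruption controller recomputes it. PDBStatus and try_evict are illustrative names, not Kubernetes APIs.

```python
from dataclasses import dataclass
from typing import Tuple

# Message text quoted from the error discussed in this guide.
BLOCKED = "Cannot evict pod as it would violate the pod's disruption budget."


@dataclass
class PDBStatus:
    """The one status field this sketch needs (hypothetical class)."""
    disruptions_allowed: int


def try_evict(pod: str, status: PDBStatus) -> Tuple[bool, str]:
    """Model the budget check for one pod covered by a single PDB."""
    if status.disruptions_allowed <= 0:
        # The real API answers 429 Too Many Requests and leaves the pod running.
        return False, BLOCKED
    # Consume one disruption until the controller recomputes the status.
    status.disruptions_allowed -= 1
    return True, f"pod/{pod} evicted"


status = PDBStatus(disruptions_allowed=1)  # minAvailable: 2, three healthy pods
for pod in ["my-app-a", "my-app-b"]:
    print(try_evict(pod, status))
```

With one allowed disruption, the first eviction succeeds and the second returns the error message discussed in this guide.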

Steps to Fix the Error

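Step 1: Review the current PDB configuration to understand the constraints it imposes. You can list PDBs using the following command. The ALLOWED DISRUPTIONS column shows how many matching pods can currently be evicted.

```bash
kubectl get pdb
```

Step 2: To get details about a specific PDB, describe it.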

```bash
kubectl describe pdb my-app-pdb
```

Step 3: Suppose the PDB reports 0 allowed disruptions because the number of healthy pods is at or below the minAvailable threshold. In that case, you can temporarily scale up your deployment so that more pods are available during the eviction.
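To tell whether scaling up will help, compare the counters in the PDB status. In kubectl get pdb my-app-pdb -o json these are currentHealthy, desiredHealthy and expectedPods. The describe output shows them as Current, Desired and Total. The following hypothetical Python helper sketches that reasoning. Its messages are assumptions for illustration.

```python
def diagnose(current_healthy: int, desired_healthy: int, expected_pods: int) -> str:
    """Suggest a next step from a PDB's status counters (hypothetical helper)."""
    if desired_healthy >= expected_pods:
        # e.g. minAvailable equals the replica count: no eviction can ever succeed.
        return "no evictions possible even when all pods are healthy: scale up or relax the PDB"
    if current_healthy <= desired_healthy:
        # Some expected pods are not Ready, so the spare capacity is used up.
        return "too few healthy pods: fix the unhealthy pods or scale up"
    return f"{current_healthy - desired_healthy} eviction(s) allowed"


print(diagnose(current_healthy=2, desired_healthy=2, expected_pods=3))
```

The first branch catches a budget that can never allow an eviction, such as minAvailable equal to the replica count. The second catches a temporary shortage caused by pods that are not Ready.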

For example, if you have a deployment named my-app, you can scale it up.

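```bash
kubectl scale deployment my-app --replicas=5
```

Once the new pods are Ready, the PDB has more room. With minAvailable: 2 and five healthy pods, up to three pods can be evicted without violating the budget. The new pods must be able to run on nodes other than the one you are draining.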
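New replicas only count toward the budget once they are Ready. It can therefore help to wait until the PDB actually allows an eviction before running the drain again. Below is a Python sketch of such a wait loop. kubectl_disruptions_allowed and wait_for_budget are hypothetical helpers, and the 5-second interval and 300-second timeout are arbitrary assumptions. The fetch and sleep functions are parameters, so the loop can be exercised without a cluster.

```python
import subprocess
import time
from typing import Callable


def kubectl_disruptions_allowed(pdb: str, namespace: str = "default") -> int:
    """Read status.disruptionsAllowed for one PDB using kubectl's jsonpath output."""
    result = subprocess.run(
        ["kubectl", "get", "pdb", pdb, "-n", namespace,
         "-o", "jsonpath={.status.disruptionsAllowed}"],
        capture_output=True, text=True, check=True,
    )
    return int(result.stdout.strip() or 0)  # empty output treated as 0


def wait_for_budget(fetch: Callable[[], int], needed: int = 1,
                    timeout: float = 300.0, interval: float = 5.0,
                    sleep: Callable[[float], None] = time.sleep) -> bool:
    """Poll fetch() until it reports at least `needed` allowed disruptions."""
    deadline = time.monotonic() + timeout
    while True:
        if fetch() >= needed:
            return True
        if time.monotonic() >= deadline:
            return False
        sleep(interval)
```

For example, wait_for_budget(lambda: kubectl_disruptions_allowed("my-app-pdb")) returns True once the PDB allows at least one eviction, or False after the timeout.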

Step 4: You can edit the PDB configuration if you need to change the PDB constraints. For instance, you might want to temporarily lower the minAvailable value.

```yaml
apiVersion: policy/v1
kind: PodDisruptionBudget
metadata:
  name: my-app-pdb
spec:
  minAvailable: 1
  selector:
    matchLabels:
      app: my-app
```

Then apply the updated PDB configuration.

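```bash
kubectl apply -f my-app-pdb.yaml
```

Remember to restore the original minAvailable value once the maintenance is finished.

Step 5: Make sure planned evictions and maintenance activities take the PDB constraints into account. A typical workflow has three parts. Cordon the node so that no new pods are scheduled on it. Drain it; kubectl drain evicts pods through the Eviction API, so it respects PDBs. Uncordon it after maintenance.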

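```bash
kubectl cordon node-name
kubectl drain node-name --ignore-daemonsets --delete-emptydir-data
kubectl uncordon node-name
```

The --delete-emptydir-data flag replaces the deprecated --delete-local-data flag. It lets the drain proceed for pods that use emptyDir volumes, whose data is lost when the pods are evicted.

Real-World Example Scenario to Fix the Evict Pod Error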

Imagine you have a deployment with three replicas and a PDB that requires at least two replicas to be available.
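Why would this setup block a drain at all? With three healthy replicas and minAvailable: 2, the budget allows one disruption at a time. The following Python sketch simulates evicting one node's pods in order. It is a simplification that assumes all pods start healthy and that replacement pods are not Ready yet. The function and pod names are hypothetical.

```python
from typing import Dict, List

BLOCKED = "Cannot evict pod as it would violate the pod's disruption budget."


def simulate_drain(pods_by_node: Dict[str, List[str]], node: str,
                   min_available: int) -> List[str]:
    """Evict one node's pods in order against an integer minAvailable budget.

    Assumes every listed pod matches the PDB, all start healthy, and no
    replacement pod becomes Ready during the simulation.
    """
    healthy = sum(len(pods) for pods in pods_by_node.values())
    log = []
    for pod in pods_by_node[node]:
        if healthy - min_available > 0:  # disruptionsAllowed > 0
            healthy -= 1
            log.append(f"pod/{pod} evicted")
        else:
            log.append(f"{pod}: {BLOCKED}")
    return log


# Three replicas, two of them on node-1, minAvailable: 2.
for line in simulate_drain({"node-1": ["my-app-a", "my-app-b"],
                            "node-2": ["my-app-c"]}, "node-1", 2):
    print(line)
```

With two my-app pods on the node being drained, the first eviction succeeds and the second is refused. With four replicas, both evictions fit in the budget. In a real cluster, kubectl drain keeps retrying a refused eviction, so it may still finish once a replacement pod becomes Ready on another node.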

Step 1: Create a deployment manifest, my-app-deployment.yaml, with three replicas.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-app
spec:
  replicas: 3
  selector:
    matchLabels:
      app: my-app
  template:
    metadata:
      labels:
        app: my-app
    spec:
      containers:
      - name: my-app-container
        image: nginx:latest
        ports:
        - containerPort: 80
```

Apply the deployment.

```bash
kubectl apply -f my-app-deployment.yaml
```

Step 2: Check the deployment status to ensure all pods are running.

```bash
kubectl get deployments
```

Output:

```
NAME     READY   UP-TO-DATE   AVAILABLE   AGE
my-app   3/3     3            3           1d
```

Check the status of the pods.

```bash
kubectl get pods -l app=my-app
```

Output:

```
NAME                READY   STATUS    RESTARTS   AGE
my-app-3cxegjgn6y   1/1     Running   0          19d
my-app-0ntddoruq1   1/1     Running   0          2d
my-app-infggjw1n9   1/1     Running   0          3d
```

Step 3: Create a PDB YAML file to ensure at least two replicas are available.

```yaml
apiVersion: policy/v1
kind: PodDisruptionBudget
metadata:
  name: my-app-pdb
spec:
  minAvailable: 2
  selector:
    matchLabels:
      app: my-app
```

Apply the PDB.

```bash
kubectl apply -f my-app-pdb.yaml
```

Output:

```
poddisruptionbudget.policy/my-app-pdb created
```

Step 4: List the nodes in your cluster to choose a node to drain.

```bash
kubectl get nodes
```

Output:

```
NAME     STATUS   ROLES    AGE   VERSION
node-1   Ready    master   22d   v1.24.1
node-2   Ready    worker   22d   v1.25.3
node-3   Ready    master   14d   v1.24.1
```

Step 5: Attempt to drain a node (replace node-1 with the actual node name).

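```bash
kubectl drain node-1 --ignore-daemonsets --delete-emptydir-data
```

With three healthy replicas and minAvailable: 2, the PDB allows only one disruption at a time. The drain is blocked if node-1 hosts more than one my-app pod, or if one of the replicas is not Ready. In that case the drain cannot evict the pod without violating the PDB and reports an error such as:

```
error: Cannot evict pod as it would violate the pod's disruption budget.
```

kubectl drain keeps retrying a blocked eviction until it succeeds or the --timeout period expires. By default it waits indefinitely, so you may need to interrupt it before continuing.

Step 6: Scale up the deployment to ensure enough pods are available.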

```bash
kubectl scale deployment my-app --replicas=4
```

Step 7: Verify the scaled deployment.

```bash
kubectl get deployments
```

Output:

```
NAME     READY   UP-TO-DATE   AVAILABLE   AGE
my-app   4/4     4            4           2d
```

Step 8: Check the status of the pods.

```bash
kubectl get pods -l app=my-app
```

Output:

```
NAME                READY   STATUS    RESTARTS   AGE
my-app-3cxegjgn6y   1/1     Running   0          19d
my-app-0ntddoruq1   1/1     Running   0          2d
my-app-infggjw1n9   1/1     Running   0          3d
my-app-t7ulz8lbex   1/1     Running   0          4d
```

Step 9: After scaling up, drain the node again (replace node-1 with the actual node name).

```bash
kubectl drain node-1 --ignore-daemonsets --delete-emptydir-data
```

Output:

```
node/node-1 cordoned
WARNING: ignoring DaemonSet-managed Pods: kube-system/kube-proxy-3cxegjgn6y, kube-system/weave-net-3cxegjgn6y
evicting pod my-app-3cxegjgn6y
pod/my-app-3cxegjgn6y evicted
node/node-1 drained
```

Step 10: Once the maintenance is complete, uncordon the node with kubectl uncordon node-1 so that it can run pods again. You can then scale the deployment back to its original size if desired.

```bash
kubectl scale deployment my-app --replicas=3
```

Step 11: Verify the scaled-back deployment.

```bash
kubectl get deployments
```

Output:

```
NAME     READY   UP-TO-DATE   AVAILABLE   AGE
my-app   3/3     3            3           2d
```

Step 12: Check the status of the pods.

```bash
kubectl get pods -l app=my-app
```

Output:

```
NAME                READY   STATUS    RESTARTS   AGE
my-app-3cxegjgn6y   1/1     Running   0          19d
my-app-0ntddoruq1   1/1     Running   0          2d
my-app-infggjw1n9   1/1     Running   0          3d
```

Conclusion

Managing disruptions in Kubernetes requires careful consideration of Pod Disruption Budgets to maintain application availability. By understanding and appropriately configuring PDBs, scaling deployments, and planning evictions, you can avoid the error “Cannot evict pod as it would violate the pod’s disruption budget.” This guide provides a foundational understanding and practical steps to handle such scenarios effectively.

FAQs

1. How can I fix this pod eviction error?

To fix this error, you can either scale up the deployment to increase the number of available pods or temporarily adjust the PDB to lower the minimum required pods.
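For an integer minAvailable, both fixes come down to the same inequality: evicting k pods at once requires the number of healthy pods minus minAvailable to be at least k. The hypothetical Python helper below computes both options. It assumes all replicas are healthy and the PDB uses an integer minAvailable.

```python
from typing import Dict


def fix_options(healthy: int, min_available: int, pods_to_evict: int) -> Dict[str, int]:
    """Two ways to let pods_to_evict pods go at once (integer minAvailable)."""
    return {
        # Option 1: enough healthy replicas that healthy - minAvailable >= pods_to_evict.
        "scale_replicas_to": max(healthy, min_available + pods_to_evict),
        # Option 2: a minAvailable low enough for the current healthy count
        # (never higher than the value you already have).
        "lower_min_available_to": max(0, min(min_available, healthy - pods_to_evict)),
    }


print(fix_options(healthy=3, min_available=2, pods_to_evict=2))
```

For example, take three healthy replicas, minAvailable: 2 and two pods on the node being drained. The helper suggests either scaling to four replicas or lowering minAvailable to 1.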

2. Can I change the Pod Disruption Budget to allow pod eviction?

Yes. You can lower the minAvailable value, or raise maxUnavailable for a PDB that uses that field. Either change reduces the number of healthy pods the budget requires, so a pod can be evicted without violating the PDB.
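If you lower minAvailable for maintenance, it is easy to forget to restore it. The following Python sketch wraps the change in a context manager. It patches the PDB with kubectl patch --type=merge and puts the original value back even if the drain fails. It assumes the PDB uses minAvailable (not maxUnavailable) and that kubectl is on the PATH. The function names are hypothetical. The run parameter exists only so the logic can be tested without a cluster.

```python
import json
import subprocess
from contextlib import contextmanager
from typing import Callable, Iterator, List, Union


def run_kubectl(args: List[str]) -> str:
    """Run kubectl with the given arguments and return stdout (raises on failure)."""
    result = subprocess.run(["kubectl", *args], capture_output=True, text=True, check=True)
    return result.stdout


@contextmanager
def lowered_min_available(pdb: str, temporary: Union[int, str],
                          run: Callable[[List[str]], str] = run_kubectl) -> Iterator[None]:
    """Temporarily set spec.minAvailable on a PDB, restoring the old value on exit."""
    # Assumes the PDB uses minAvailable; a maxUnavailable PDB raises KeyError here.
    original = json.loads(run(["get", "pdb", pdb, "-o", "json"]))["spec"]["minAvailable"]

    def patch(value: Union[int, str]) -> None:
        body = json.dumps({"spec": {"minAvailable": value}})
        run(["patch", "pdb", pdb, "--type=merge", "-p", body])

    patch(temporary)
    try:
        yield
    finally:
        patch(original)  # runs even if the code inside the with-block fails
```

To use it, put your drain command inside a with lowered_min_available("my-app-pdb", 1): block.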

3. What should I do if node draining is blocked by the PDB?

You can scale up the affected deployment to ensure more pods are available, or edit the PDB constraints temporarily, allowing the node to drain without violating the disruption budget.

4. How can I view the current PDB configuration in Kubernetes?

You can list PDBs with kubectl get pdb and check the details of a specific PDB using kubectl describe pdb pdb_name to review its current settings.
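To find every PDB that is currently blocking evictions across namespaces, you can filter the JSON output of kubectl get pdb -A -o json. The following Python sketch does that. blocked_pdbs and fetch_all_pdbs are hypothetical helpers. A PDB that the controller has not processed yet may have no status; the sketch assumes such a PDB allows zero disruptions.

```python
import json
import subprocess
from typing import List, Tuple


def fetch_all_pdbs() -> dict:
    """Return the parsed output of `kubectl get pdb -A -o json`."""
    out = subprocess.run(["kubectl", "get", "pdb", "-A", "-o", "json"],
                         capture_output=True, text=True, check=True).stdout
    return json.loads(out)


def blocked_pdbs(doc: dict) -> List[Tuple[str, str, int, int]]:
    """List (namespace, name, currentHealthy, desiredHealthy) for PDBs allowing 0 disruptions."""
    blocked = []
    for item in doc.get("items", []):
        # A PDB without status yet is treated as blocking (an assumption).
        status = item.get("status", {})
        if status.get("disruptionsAllowed", 0) == 0:
            meta = item["metadata"]
            blocked.append((meta.get("namespace", ""), meta["name"],
                            status.get("currentHealthy", 0),
                            status.get("desiredHealthy", 0)))
    return blocked
```

For example, print(blocked_pdbs(fetch_all_pdbs())) lists each blocked budget with its current and required healthy pod counts.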