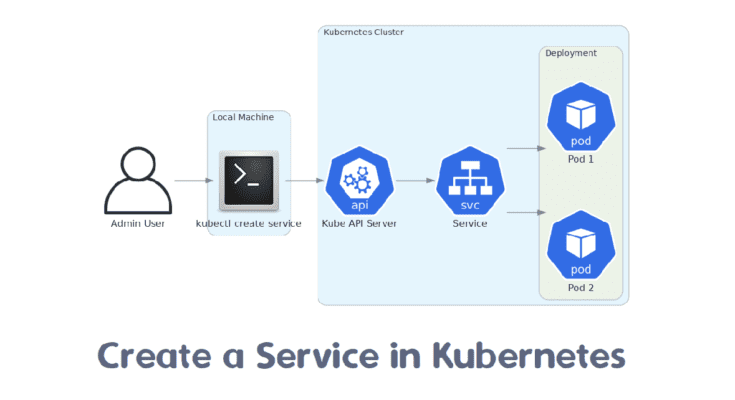

In Kubernetes, a Service is an abstraction that defines how to access a group of Pods. It provides a stable endpoint for network communication, even if the Pods change over time. Services help ensure that traffic is routed to the correct Pods.

This guide will walk you through the process of creating a service in Kubernetes with practical examples. We’ll cover different types of services and use cases to give you a comprehensive understanding.

Types of Kubernetes Services

Kubernetes offers several types of services, each designed for different scenarios:

- ClusterIP: Exposes the service on a cluster-internal IP. The service is only reachable from within the cluster.

- NodePort: Exposes the service on each Node’s IP at a static port. A ClusterIP service, to which the NodePort service routes, is automatically created.

- LoadBalancer: Exposes the service externally using a cloud provider’s load balancer.

- ExternalName: Maps a service to a DNS name, not to a set of pods.

Creating a ClusterIP Service

A ClusterIP service is the default type of service in Kubernetes. It exposes the service on a cluster-internal IP, making it reachable only within the cluster.

1. First, we create a deployment configuration file named myapp-deployment.yaml for our application. Here’s the configuration:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: myapp
    spec:
      replicas: 3
      selector:
        matchLabels:
          app: myapp
      template:
        metadata:
          labels:
            app: myapp
        spec:
          containers:
          - name: myapp
            image: nginx
            ports:
            - containerPort: 80

This deployment creates three replicas of an application labeled myapp, running the nginx container on port 80.

2. Next, we define a ClusterIP service in a file named myapp-service.yaml to expose the deployment:

    apiVersion: v1
    kind: Service
    metadata:
      name: myapp-service
    spec:
      selector:
        app: myapp
      ports:
      - protocol: TCP
        port: 80
        targetPort: 80

This service configuration exposes the myapp application within the cluster on port 80. Because no type is specified, Kubernetes uses the default ClusterIP type.
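The manifest above uses the same number for port and targetPort, which can hide the difference between the two fields. As a minimal sketch, assuming a hypothetical service name myapp-service-8080 that is not part of this guide, the following variant listens on port 8080 inside the cluster and forwards traffic to port 80 on the Pods:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: myapp-service-8080   # hypothetical name, for illustration only
spec:
  selector:
    app: myapp               # same Pod label as the deployment above
  ports:
  - protocol: TCP
    port: 8080               # port that clients inside the cluster connect to
    targetPort: 80           # containerPort on the selected Pods
```

With a service like this, clients in the cluster would reach nginx at myapp-service-8080:8080, while the containers keep listening on port 80.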

3. Let’s apply the deployment and service configurations:

    # kubectl apply -f myapp-deployment.yaml
    # kubectl apply -f myapp-service.yaml

4. Check the deployment status:

    # kubectl get deployments

Output.

    NAME    READY   UP-TO-DATE   AVAILABLE   AGE
    myapp   3/3     3            3           1m

5. Check the service:

    # kubectl get services

Output.

    NAME            TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)   AGE
    myapp-service   ClusterIP   10.96.100.100   <none>        80/TCP    1m

6. Verify that the service is accessible from within the cluster:

    # kubectl run -it --rm --restart=Never busybox --image=busybox -- nslookup myapp-service

The above command creates a temporary pod in the cluster to perform a DNS lookup on the service named myapp-service. It is useful for debugging DNS issues, as it verifies whether the cluster DNS can resolve myapp-service to an IP address. Note that it confirms name resolution only, not that the Pods behind the service respond.

Output.

    Server:    10.96.0.10
    Address 1: 10.96.0.10 kube-dns.kube-system.svc.cluster.local

    Name:      myapp-service
    Address 1: 10.96.100.100 myapp-service.default.svc.cluster.local

Creating a NodePort Service

A NodePort service exposes the service on each Node’s IP at a static port. It also creates a ClusterIP service to route traffic.

1. First, create a file named myapp-service-nodeport.yaml that defines a NodePort service with the following configuration.

    apiVersion: v1
    kind: Service
    metadata:
      name: myapp-service-nodeport
    spec:
      type: NodePort
      selector:
        app: myapp
      ports:
      - protocol: TCP
        port: 80
        targetPort: 80
        nodePort: 30007

This configuration creates a service that exposes the myapp application on each node’s IP at port 30007, internally routing traffic to port 80 on the pods.
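The nodePort field is optional. If it is omitted, Kubernetes allocates a free port from the node port range, which is 30000-32767 by default. A minimal sketch of that variant, using a hypothetical service name that is not part of this guide:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: myapp-service-nodeport-auto   # hypothetical name, for illustration only
spec:
  type: NodePort
  selector:
    app: myapp
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
    # nodePort is omitted here: the control plane picks a free port from the
    # node port range (30000-32767 unless the cluster is configured otherwise)
```

The allocated port then appears in the PORT(S) column of kubectl get services. Fixing the port by hand, as in the manifest above, is mainly useful when firewall rules or external clients depend on a known number.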

2. Apply the above configuration to Kubernetes.

    # kubectl apply -f myapp-service-nodeport.yaml

3. Verify the NodePort service.

    # kubectl get services

Output.

    NAME                     TYPE       CLUSTER-IP      EXTERNAL-IP   PORT(S)        AGE
    myapp-service-nodeport   NodePort   10.96.200.200   <none>        80:30007/TCP   1m

4. Verify that the service is accessible from the node’s IP:

    # curl NodeIP:30007

Replace NodeIP with the IP address of a Kubernetes node. You will get the following output.

    <!DOCTYPE html>
    <html>
    <head>
    <title>Welcome to nginx!</title>
    ...
    </head>
    <body>
    <h1>Welcome to nginx!</h1>
    ...
    </body>
    </html>

Creating a LoadBalancer Service

A LoadBalancer service exposes the service externally using a cloud provider’s load balancer.

1. First, create a file named myapp-service-loadbalancer.yaml that defines a LoadBalancer service with the following configuration.

    apiVersion: v1
    kind: Service
    metadata:
      name: myapp-service-loadbalancer
    spec:
      type: LoadBalancer
      selector:
        app: myapp
      ports:
      - protocol: TCP
        port: 80
        targetPort: 80

This configuration creates a service that exposes the myapp application externally using the cloud provider’s load balancer.
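Because a LoadBalancer service is reachable from outside the cluster, it is often worth restricting who can connect. The sketch below assumes a cloud provider that honors the spec.loadBalancerSourceRanges field (support varies by provider). The service name is hypothetical, and 203.0.113.0/24 is a documentation-only placeholder range, not a value from this guide:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: myapp-service-loadbalancer-restricted   # hypothetical name
spec:
  type: LoadBalancer
  loadBalancerSourceRanges:
  - 203.0.113.0/24        # placeholder CIDR; replace with the client range to allow
  selector:
    app: myapp
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
```

On providers that ignore this field, the same restriction would have to be applied in the provider's own firewall or security group settings.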

2. Apply this configuration with the kubectl command.

    # kubectl apply -f myapp-service-loadbalancer.yaml

3. Verify the LoadBalancer service.

    # kubectl get services

Output.

    NAME                         TYPE           CLUSTER-IP      EXTERNAL-IP     PORT(S)        AGE
    myapp-service-loadbalancer   LoadBalancer   10.96.150.150   192.168.10.10   80:30007/TCP   1m

4. Once the cloud provider has assigned an external IP (the EXTERNAL-IP column shows <pending> until then), verify that the service is accessible from it:

    # curl ExternalIP

Replace ExternalIP with the IP address shown in the EXTERNAL-IP column. You will get the following output.

    <!DOCTYPE html>
    <html>
    <head>
    <title>Welcome to nginx!</title>
    ...
    </head>
    <body>
    <h1>Welcome to nginx!</h1>
    ...
    </body>
    </html>

Creating an ExternalName Service

An ExternalName service maps a service name to an external DNS name. Instead of selecting Pods, the cluster DNS answers lookups for the service with a CNAME record pointing to that name, so workloads inside the cluster can reach a service outside it through a standard Kubernetes service name, without any endpoints or proxying being configured.

1. First, create a YAML file named myapp-service-externalname.yaml with the following configuration.

    apiVersion: v1
    kind: Service
    metadata:
      name: myapp-service-externalname
    spec:
      type: ExternalName
      externalName: example.com

This configuration creates a service that maps to the DNS name example.com.
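One practical reason to use an ExternalName service is that workloads can refer to a stable in-cluster name while the real hostname is managed in one place. As an illustrative sketch only, the Pod below passes the service name to its container through an environment variable and resolves it once. The Pod name and the UPSTREAM_HOST variable are hypothetical, and the Pod is only meaningful after the service has been applied:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: externalname-client          # hypothetical name
spec:
  restartPolicy: Never               # run the lookup once and stop
  containers:
  - name: client
    image: busybox
    env:
    - name: UPSTREAM_HOST            # hypothetical variable name
      value: myapp-service-externalname
    # Kubernetes expands $(UPSTREAM_HOST) from the container's environment
    command: ["nslookup", "$(UPSTREAM_HOST)"]
```

If the external hostname later changes, only the externalName field of the service needs updating; the Pod keeps using the same in-cluster name.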

2. Apply the above configuration.

    # kubectl apply -f myapp-service-externalname.yaml

3. Verify the ExternalName service.

    # kubectl get services

Output.

    NAME                         TYPE           CLUSTER-IP   EXTERNAL-IP   PORT(S)   AGE
    myapp-service-externalname   ExternalName   <none>       example.com   <none>    1m

4. Now you can use myapp-service-externalname to reach example.com from within your Kubernetes cluster. Let’s create a temporary pod to test the DNS resolution:

    # kubectl run -it --rm --restart=Never busybox --image=busybox -- nslookup myapp-service-externalname

Output.

    Server:    10.96.0.10
    Address 1: 10.96.0.10 kube-dns.kube-system.svc.cluster.local

    Name:      myapp-service-externalname
    Address 1: 93.184.216.34 example.com

Delete a Service in Kubernetes

There are two ways you can delete a service in Kubernetes.

1. Delete a Service Using kubectl Command

Use the following command to delete a Service by name:

    # kubectl delete service service-name

Replace service-name with the actual name of the Service you want to delete.

2. Delete a Service Using the YAML File

If you created the Service using a YAML file, you can delete it with this command:

    # kubectl delete -f service.yaml

Replace service.yaml with your YAML service file name.

3. Verify Deletion

To confirm the Service has been deleted, run:

    # kubectl get services

This will list all remaining Services in the current namespace.

Conclusion

Creating services in Kubernetes is essential for managing how your applications communicate within and outside your cluster. By choosing among the ClusterIP, NodePort, LoadBalancer, and ExternalName types, you can decide whether an application is reachable only from inside the cluster, through a port on every node, through a cloud load balancer, or as an alias for an external DNS name.

FAQs

1. What is the default type of Service in Kubernetes?

The default Service type is ClusterIP, which is only accessible within the cluster.

2. How do I expose a Service externally in Kubernetes?

To expose a Service externally, use the LoadBalancer or NodePort Service type. For example, LoadBalancer provisions a cloud load balancer with an external IP, while NodePort opens the same static port on every node.

3. Can a Service route traffic to multiple Pods?

Yes, a Service can load balance traffic across multiple Pods that match the selector labels specified in the Service definition.

4. Can I create a Service without a selector?

Yes, you can create a Service without a selector. This is useful for manually specifying the endpoints or for external services.
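As a minimal sketch of a selector-less Service, the manifests below pair a Service with a manually managed EndpointSlice. The names external-db and external-db-1, the port 5432, and the address 192.0.2.10 are placeholders chosen for illustration, not values from this guide:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: external-db            # hypothetical name
spec:
  # no selector: Kubernetes does not create endpoints automatically
  ports:
  - name: db
    protocol: TCP
    port: 5432
    targetPort: 5432
---
apiVersion: discovery.k8s.io/v1
kind: EndpointSlice
metadata:
  name: external-db-1          # hypothetical; conventionally prefixed with the Service name
  labels:
    kubernetes.io/service-name: external-db   # links this slice to the Service
addressType: IPv4
ports:
- name: db                     # matches the Service port name
  protocol: TCP
  port: 5432
endpoints:
- addresses:
  - "192.0.2.10"               # placeholder backend address (documentation range)
```

Traffic sent to external-db:5432 inside the cluster would then be forwarded to the listed address, which lets in-cluster clients use a normal service name for a backend that Kubernetes does not manage.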