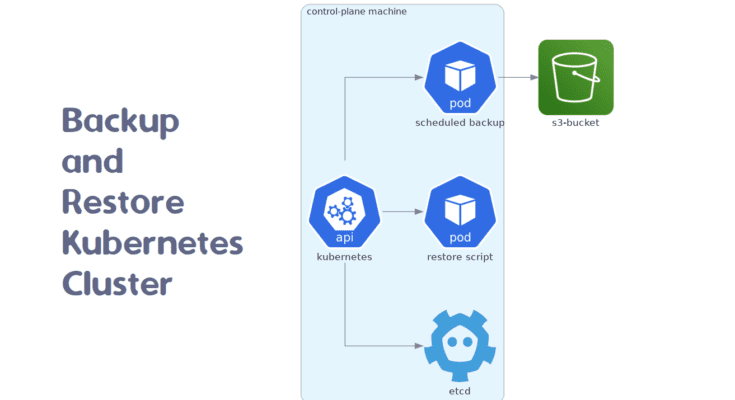

Backing up and restoring a Kubernetes cluster is essential for maintaining stability. It ensures that your cluster can recover from failures or data loss. In production environments, unexpected issues can lead to outages or corrupted data. A proper backup strategy helps you avoid downtime and quickly restore services.

In this article, we’ll explore how to back up and restore your Kubernetes cluster. You will learn two key methods: using etcd snapshots and Velero. Both methods ensure your cluster is protected and can be restored efficiently.

Backing Up Kubernetes with etcd

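Let’s first look at how to back up Kubernetes using etcd. An etcd snapshot captures the state of the Kubernetes cluster, that is, every API object stored in etcd. It does not capture the data inside persistent volumes.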

Step 1: Install etcdctl

First, ensure that etcdctl is installed on your master node. You can install etcdctl by downloading it from the official etcd release page. Here’s a command to do that:

# wget -q --show-progress --https-only --timestamping "https://github.com/etcd-io/etcd/releases/download/v3.5.0/etcd-v3.5.0-linux-amd64.tar.gz"

# tar -xvf etcd-v3.5.0-linux-amd64.tar.gz

# mv etcd-v3.5.0-linux-amd64/etcdctl /usr/local/bin/

Step 2: Access etcd

To begin with, we need to access the etcd cluster. Typically, etcd runs on the master nodes. SSH into one of your master nodes using the following command:

# ssh user@master-node

Step 3: Take a Snapshot

Next, use the etcdctl command-line tool to take a snapshot of your etcd cluster.

# ETCDCTL_API=3 etcdctl snapshot save snapshot.db \
  --endpoints=https://127.0.0.1:2379 \
  --cacert=/etc/kubernetes/pki/etcd/ca.crt \
  --cert=/etc/kubernetes/pki/etcd/server.crt \
  --key=/etc/kubernetes/pki/etcd/server.key

After running this command, you should see output indicating that the snapshot has been saved.

{"level":"info","ts":...,"caller":"snapshot/v3_snapshot.go:69","msg":"saved","path":"snapshot.db"}

Step 4: Verify the Snapshot

Now, verify the integrity of the snapshot to ensure it was taken correctly.

# ETCDCTL_API=3 etcdctl snapshot status snapshot.db

The output will show the snapshot status:

{"level":"info","snapshot":{"revision":...,"totalKey":...,"totalSize":"..."}}

Step 5: Store the Snapshot

Finally, store the snapshot file somewhere secure and separate from the cluster, for example on a remote server or in cloud storage. Here’s an example using scp to transfer the file:

# scp snapshot.db user@backup-server:/backup-dir

Storing etcd Snapshot to Persistent Volume

Storing the etcd snapshot directly to a Persistent Volume (PV) can be a convenient and secure way to manage your backups. Here’s how you can do it:

Step 1: Create a Persistent Volume

Create a file named pv.yaml.

apiVersion: v1
kind: PersistentVolume
metadata:
  name: etcd-backup-pv
spec:
  capacity:
    storage: 10Gi
  accessModes:
  - ReadWriteOnce
  hostPath:
    path: "/mnt/data/etcd-backup"

Apply the PV configuration:

# kubectl apply -f pv.yaml

Verify the PV status:

# kubectl get pv etcd-backup-pv

You should see output similar to this:

NAME             CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS      CLAIM   STORAGECLASS   REASON   AGE
etcd-backup-pv   10Gi       RWO            Retain           Available           standard                10s

Step 2: Create a Persistent Volume Claim and bind to the PV

Create a file named pvc.yaml.

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: etcd-backup-pvc
spec:
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 10Gi

Apply the PVC configuration:

# kubectl apply -f pvc.yaml

Verify the PVC status:

# kubectl get pvc etcd-backup-pvc

You should see output similar to this:

NAME              STATUS   VOLUME           CAPACITY   ACCESS MODES   STORAGECLASS   AGE
etcd-backup-pvc   Bound    etcd-backup-pv   10Gi       RWO                           10s

Step 3: Mount the PVC in a Pod to store the etcd snapshot

Create a pod.yaml file.

apiVersion: v1
kind: Pod
metadata:
  name: etcd-backup-pod
spec:
  containers:
  - name: etcd-backup-container
    image: busybox
    volumeMounts:
    - mountPath: "/backup"
      name: etcd-backup-volume
    command: ["/bin/sh", "-c", "sleep 3600"]
  volumes:
  - name: etcd-backup-volume
    persistentVolumeClaim:
      claimName: etcd-backup-pvc

Apply the Pod configuration:

# kubectl apply -f pod.yaml

Verify the Pod status:

# kubectl get pods etcd-backup-pod

Output:

NAME              READY   STATUS    RESTARTS   AGE
etcd-backup-pod   1/1     Running   0          10s

Step 4: Take a Snapshot and Store it in the PVC

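# kubectl exec -it etcd-backup-pod -- sh -c "ETCDCTL_API=3 etcdctl snapshot save /backup/snapshot.db --endpoints=https://127.0.0.1:2379 --cacert=/etc/kubernetes/pki/etcd/ca.crt --cert=/

Note that this command only works if the container image provides etcdctl, can reach the etcd endpoint, and has the etcd certificates mounted. The stock busybox image used in pod.yaml does not include etcdctl.

You should see output similar to: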

{"level":"info","ts":...,"caller":"snapshot/v3_snapshot.go:69","msg":"saved","path":"/backup/snapshot.db"}

Now, your etcd snapshot is stored in the Persistent Volume and can be restored later.
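Because the stock busybox image does not ship etcdctl, one workable variation is to take the snapshot on the master node, as in the earlier section, and then copy the file into the volume mounted in the pod. The sketch below assumes `kubectl cp` can reach the pod (it relies on `tar` inside the container, which busybox provides). The helper name `copy_snapshot_to_pod` is hypothetical, and the default pod name and mount path are taken from pod.yaml.

```shell
#!/usr/bin/env bash
# Sketch: copy a snapshot taken on the node into the PVC mounted at /backup.
# copy_snapshot_to_pod is a hypothetical helper, not part of kubectl.
copy_snapshot_to_pod() {
  local snapshot="$1"                 # local snapshot file, e.g. snapshot.db
  local pod="${2:-etcd-backup-pod}"   # pod name from pod.yaml
  local dest="${3:-/backup}"          # mountPath from pod.yaml

  # Refuse to copy a missing or empty file.
  if [ ! -s "$snapshot" ]; then
    echo "snapshot file '$snapshot' is missing or empty" >&2
    return 1
  fi
  kubectl cp "$snapshot" "${pod}:${dest}/$(basename "$snapshot")"
}
```

Calling `copy_snapshot_to_pod snapshot.db` from the directory that holds the snapshot places it at /backup/snapshot.db inside the pod.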

Restoring Kubernetes with etcd

When it’s time to restore, follow these steps.

Step 1: Stop the etcd Service

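First, stop the etcd service on all master nodes. The command below assumes etcd runs as a systemd service. On kubeadm clusters, etcd runs as a static pod and is stopped by moving its manifest out of /etc/kubernetes/manifests instead.

# systemctl stop etcd

Step 2: Restore the Snapshot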

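Next, restore the etcd data from the snapshot. etcdctl will not restore into a data directory that already holds data, so move the old /var/lib/etcd out of the way before running the command.

# ETCDCTL_API=3 etcdctl snapshot restore snapshot.db --data-dir=/var/lib/etcd

The output will confirm the restoration:

{"level":"info","msg":"restored snapshot","path":"snapshot.db","data-dir":"/var/lib/etcd"}

Step 3: Update etcd Configuration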

If necessary, update the etcd configuration. Ensure that --data-dir points to the restored directory.
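One way to check this is to read the flag straight from the etcd configuration. The sketch below assumes the configuration is a file that contains a literal `--data-dir=<path>` argument, as in a kubeadm static pod manifest (commonly /etc/kubernetes/manifests/etcd.yaml) or a systemd unit file. The helper names `etcd_data_dir` and `check_data_dir` are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: print the --data-dir value found in an etcd manifest or unit file.
etcd_data_dir() {
  local config="$1"
  # Match "--data-dir=<path>" and print only the path of the first match.
  sed -n 's/.*--data-dir=\([^[:space:]"]*\).*/\1/p' "$config" | head -n 1
}

# Return 0 when the configured data dir equals the restored directory.
check_data_dir() {
  local config="$1" expected="$2" actual
  actual="$(etcd_data_dir "$config")"
  if [ "$actual" = "$expected" ]; then
    echo "OK: --data-dir is $actual"
  else
    echo "MISMATCH: --data-dir is '${actual}', expected '${expected}'" >&2
    return 1
  fi
}
```

For example, `check_data_dir /etc/kubernetes/manifests/etcd.yaml /var/lib/etcd` succeeds only if the manifest points at the directory used in the restore command.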

Step 4: Restart etcd and Kubernetes Services

Finally, restart the etcd service and other Kubernetes services:

# systemctl start etcd

# systemctl restart kube-apiserver kube-controller-manager kube-scheduler

Using Velero for Kubernetes Backup and Restore

Now, let’s use Velero, a tool designed specifically for backing up and restoring Kubernetes cluster resources and persistent volumes.

Step 1: Install Velero

First, install the Velero CLI on your local machine and deploy Velero to your cluster. Download and install Velero:

# curl -LO https://github.com/vmware-tanzu/velero/releases/download/v1.7.1/velero-v1.7.1-linux-amd64.tar.gz

# tar -xvf velero-v1.7.1-linux-amd64.tar.gz

# mv velero-v1.7.1-linux-amd64/velero /usr/local/bin/

Next, deploy Velero to your cluster, configuring it to use your chosen storage provider (e.g., AWS S3, Google Cloud Storage):

# velero install --provider aws --bucket velero-backups --secret-file ./credentials-velero --use-restic

Step 2: Configure Backup Storage

Make sure your backup storage is correctly configured. This involves setting up credentials and ensuring Velero has access to the storage location.
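For the AWS provider used in the install command, the file passed to --secret-file follows the AWS shared credentials format. The sketch below writes such a file with restrictive permissions. The helper name `write_velero_credentials` is hypothetical, and the key values in the usage example are placeholders to replace with credentials for an account that can access the bucket.

```shell
#!/usr/bin/env bash
# Sketch: write an AWS-style credentials file for `velero install --secret-file`.
# write_velero_credentials is a hypothetical helper, not part of Velero.
write_velero_credentials() {
  local file="${1:-./credentials-velero}"
  local key_id="$2" secret="$3"
  if [ -z "$key_id" ] || [ -z "$secret" ]; then
    echo "usage: write_velero_credentials FILE ACCESS_KEY_ID SECRET_ACCESS_KEY" >&2
    return 1
  fi
  # Use a subshell so the restrictive umask applies only to this file.
  ( umask 077
    printf '[default]\naws_access_key_id=%s\naws_secret_access_key=%s\n' \
      "$key_id" "$secret" > "$file" )
}
```

A call such as `write_velero_credentials ./credentials-velero "<ACCESS_KEY_ID>" "<SECRET_ACCESS_KEY>"` produces the file that the install command reads.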

Step 3: Create a Backup

Now, let’s create a backup of your Kubernetes cluster using Velero:

# velero backup create my-cluster-backup --include-namespaces default,kube-system

After running this command, you will see the status of the backup creation:

Backup request "my-cluster-backup" submitted successfully.

Run `velero backup describe my-cluster-backup` or `velero backup logs my-cluster

Step 4: Verify the Backup

To verify the backup status and ensure it has been completed successfully, run:

# velero backup describe my-cluster-backup --details

This command will show detailed information about the backup:

Name:         my-cluster-backup
Namespace:    velero
Labels:
Annotations:

Phase:  Completed

Namespaces:
  Included:  default, kube-system
  Excluded:

Resources:
  Included:        *
  Excluded:
  Cluster-scoped:  auto

...

Persistent Volume Claims:
Snapshots:

Step 5: Restore from a Backup

When you need to restore from a backup, use the following command:

# velero restore create --from-backup my-cluster-backup

You will see the restoration process starting:

Restore request "restore-1" submitted successfully.

Run `velero restore describe restore-1` or `velero restore logs restore-1` for more details.

Conclusion

Backing up and restoring Kubernetes clusters is vital for disaster recovery and high availability. By following these steps, you can regularly back up your cluster and restore it in case of data loss or corruption. Whether you use etcd snapshots for the cluster state or Velero for complete backups, these practices will safeguard your Kubernetes environments.

FAQs

1. Can I automate etcd backups in Kubernetes?

Yes, you can automate etcd backups using scripts and cron jobs that regularly take snapshots and store them in secure locations, such as persistent volumes or cloud storage.
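As a rough illustration, a cron-friendly script might take a timestamped snapshot and prune older ones. This is a sketch under assumptions: the backup directory, the retention count, the file naming, and the helper names `take_snapshot` and `prune_snapshots` are all illustrative, while the etcdctl flags are the ones used in the snapshot step earlier in this article.

```shell
#!/usr/bin/env bash
# Sketch of a cron-friendly etcd backup with simple retention.
# BACKUP_DIR, KEEP and the file naming scheme are illustrative assumptions.
BACKUP_DIR="${BACKUP_DIR:-/var/backups/etcd}"
KEEP="${KEEP:-7}"

# Save a snapshot named after the current timestamp into the given directory.
take_snapshot() {
  local dir="$1" file
  file="${dir}/etcd-snapshot-$(date +%Y%m%d-%H%M%S).db"
  mkdir -p "$dir" || return 1
  ETCDCTL_API=3 etcdctl snapshot save "$file" \
    --endpoints=https://127.0.0.1:2379 \
    --cacert=/etc/kubernetes/pki/etcd/ca.crt \
    --cert=/etc/kubernetes/pki/etcd/server.crt \
    --key=/etc/kubernetes/pki/etcd/server.key
}

# Delete the oldest snapshots so that at most "keep" files remain.
# Timestamped names sort lexicographically, so the oldest come first.
prune_snapshots() {
  local dir="$1" keep="$2" total excess
  total="$(find "$dir" -maxdepth 1 -name 'etcd-snapshot-*.db' | wc -l)"
  excess=$(( total - keep ))
  [ "$excess" -gt 0 ] || return 0
  find "$dir" -maxdepth 1 -name 'etcd-snapshot-*.db' | sort | head -n "$excess" |
    while IFS= read -r old; do rm -f -- "$old"; done
}
```

A script that ends with `take_snapshot "$BACKUP_DIR" && prune_snapshots "$BACKUP_DIR" "$KEEP"` could then be scheduled with a crontab entry such as `0 2 * * * /usr/local/bin/etcd-backup.sh`, where the script path is again hypothetical. Pruning only after a successful snapshot avoids deleting good backups when etcd is unreachable.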

2. What storage providers are compatible with Velero?

Velero supports various storage providers, including AWS S3, Google Cloud Storage, Microsoft Azure, and other S3-compatible storage solutions.

3. Can I back up specific namespaces in Kubernetes using Velero?

Yes, Velero allows you to back up specific namespaces by using the --include-namespaces flag in the backup command, which limits the scope of the backup to selected namespaces.

4. How do I restore only persistent volumes with Velero?

To restore only persistent volumes, you can use Velero’s --include-resources flag, specifying the resource type persistentvolumes, or include it in a broader restore operation.
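A minimal sketch of such a restore is shown below. It assumes the backup already contains the volume resources, and it includes persistentvolumeclaims alongside persistentvolumes as a design choice, since workloads reference volumes through their claims. The helper name `restore_persistent_volumes` is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: restore only PV-related resources from an existing Velero backup.
# restore_persistent_volumes is a hypothetical helper, not part of Velero.
restore_persistent_volumes() {
  local backup="$1"
  if [ -z "$backup" ]; then
    echo "usage: restore_persistent_volumes BACKUP_NAME" >&2
    return 1
  fi
  velero restore create --from-backup "$backup" \
    --include-resources persistentvolumes,persistentvolumeclaims
}
```

With the backup from this article, the call would be `restore_persistent_volumes my-cluster-backup`.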